delphai and Intel have partnered to enhance delphai’s machine learning (ML) and deep learning (DL) models with Intel’s state-of-the-art hardware and software solutions.

Structuring the unstructured global economy can be time-consuming and expensive. Using Intel Neural Compressor (INC), part of the Intel oneAPI AI Toolkit, together with Intel Extension for PyTorch and Intel Deep Learning Boost on 3rd Generation Intel Xeon Scalable processors, we at delphai accelerated inference for the NLP models behind our B2B company search engine and AI enrichment suite.

Optimizing firmographic fusion

After a highly competitive and rigorous selection process, Intel chose delphai as a partner in July 2022. The partnership brings our MLOps team and Intel’s teams together for joint advice and collaboration. To get started, our MLOps Engineer Malek and CTO Eugene, together with Intel’s Field Application Engineer Albertano and AI Software Development Engineer Alexander, reviewed which parts of delphai’s firmographic fusion platform could best be optimized with Intel’s software and hardware solutions.

One of the challenges we face at delphai is serving our heavy deep learning models and keeping them in production with less than 1% downtime. The state-of-the-art architectures we fine-tune and enhance deliver the most accurate predictions, but they usually have long inference times. We have been serving these models on GPUs because they are much faster than CPUs, but this comes at a high cost.

Enhancing delphai’s existing AI-NLP models

The team concluded that Intel’s solutions were best suited to enhancing our existing multilingual translation and text classification NLP models and to reducing costs. Our translation model lets delphai access and structure firmographic data in any language. Every day, we ingest thousands of foreign-language press releases and news articles in German, Chinese, Japanese, and other languages. Translating these articles lets delphai customers draw business insights from local sources, which often reveal economic trends earlier and more clearly.

delphai’s industry classification model assigns industry labels to companies using the breadth of our contextual data. By structuring and organizing the firmographic data on any company, our models can easily identify which industry it belongs to, from Automotive to Forestry to Healthcare. This enabled us to improve our train-your-own-model service, in which delphai’s AI rapidly learns a business’s idiosyncratic labeling scheme and applies it to thousands of profiles in your own database or CRM. With Intel’s software and hardware, these custom models learn from unique industry labels faster, with only a minor drop in accuracy.

Groundbreaking firmographics provided by delphai, powered by Intel

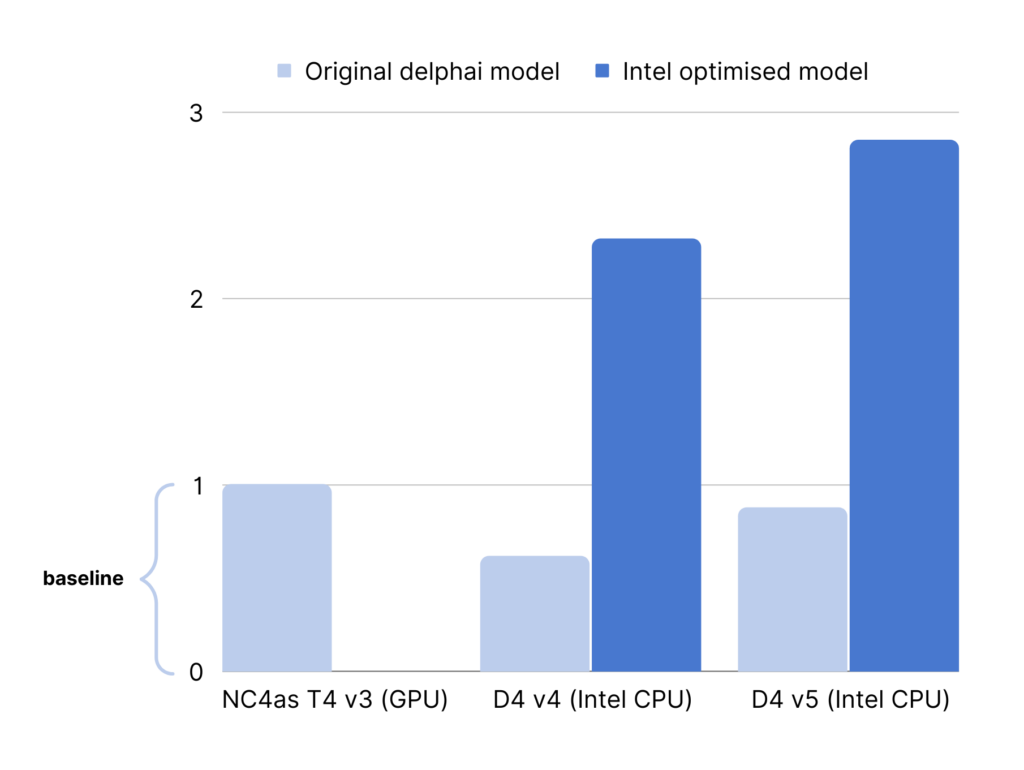

The partnership has been defined by close technical collaboration between the development teams at delphai and Intel. Working with Intel allowed us to get more value out of the models we already had: together, we optimized and further developed delphai’s current models. ML models typically produce results more slowly on CPUs than on GPUs. With Intel’s hardware, however, we increased inference speed and reduced costs more efficiently than we could have by simply adding more GPUs. In fact, this gave us a nearly 3x increase in performance per €. Ultimately, this allows us to provide more relevant, high-quality, structured firmographic data to our customers.

More, better, faster company intelligence

Our partnership with Intel was fantastic and ran smoothly, even as we navigated a complex and dynamic infrastructure. Intel’s team provided us with all the right resources to improve our processes and was always available for advice and support. Since we deployed our updated models, bolstered by Intel’s hardware and software, in July, the performance of our multilingual translation model has improved, as shown in the graph below. Azure Dv5-series VMs powered by 3rd Generation Intel Xeon Scalable processors delivered the best performance per €, which is why we deployed them in production. With INC, Intel Extension for PyTorch, and the right Intel hardware, we can process the same volume of data at a much lower cost.
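Choosing a VM by "performance per €" comes down to a simple ratio. The sketch below is a hypothetical illustration, not delphai's or Azure's tooling: it assumes performance is measured as inference throughput (samples per second) and cost as the hourly instance price in euros. The `Candidate` class and the function names are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """Hypothetical deployment option (e.g. a GPU instance or a CPU VM)."""
    name: str
    samples_per_second: float  # assumed metric: measured inference throughput
    euros_per_hour: float      # assumed metric: hourly instance price in €


def perf_per_euro(c: Candidate) -> float:
    """Samples processed per euro: (samples/s * 3600 s/h) / (€/h)."""
    if c.euros_per_hour <= 0:
        raise ValueError("price must be positive")
    return c.samples_per_second * 3600 / c.euros_per_hour


def relative_gain(new: Candidate, baseline: Candidate) -> float:
    """How many times more samples per euro `new` delivers than `baseline`."""
    return perf_per_euro(new) / perf_per_euro(baseline)


def best_candidate(candidates: list[Candidate]) -> Candidate:
    """Return the option with the highest throughput per euro."""
    if not candidates:
        raise ValueError("no candidates given")
    return max(candidates, key=perf_per_euro)
```

Under this definition, a slower CPU VM can still win if its hourly price drops by more than its throughput does. That trade-off is what a per-€ comparison captures and a raw-speed comparison misses.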

Download a copy of the whitepaper by delphai and Intel, “How to Accelerate a Business to Business (B2B) Company Search Engines using Intel Optimizations for Deep Learning and Improving Total Cost of Ownership”.
